A guest post by Neurons Lab

Please note that the information, uses, and applications expressed in the post below are solely those of our guest author, Neurons Lab, and not necessarily those of Google.

How the idea emerged

With the advancement of technology, drones have become not only smaller but also more powerful computationally. There are many examples of iPhone-sized quadcopters on the consumer drone market with enough computing power to do live tracking while recording 4K video. However, the most important element has not changed much: the controller. It is still bulky and not intuitive for beginners. Using a smartphone with on-display controls is an option; however, the control principle is still the same.

That is how the idea for this project emerged: a more personalised way to control the drone using gestures. I, ML Engineer Nikita Kiselov, undertook this project in consultation with my colleagues at Neurons Lab.

Figure 1: [GIF] Demonstration of drone flight control via gestures using MediaPipe Hands

Why use gesture recognition?

Gestures are the most natural way for people to express information non-verbally. Gesture control is an entire field of computer science that aims to interpret human gestures using algorithms. Users can control devices or interact with them without physically touching them. Nowadays, this type of control can be found everywhere from smart TVs to surgical robots, and UAVs are no exception.

Although gesture control for drones has not been widely explored lately, the approach has some advantages:

- No additional equipment is needed.

- The controls are more human-friendly.

- All you need is a camera, which is already present on all drones.

With all these features, such a control method has many applications.

Flying action camera. In extreme sports, drones are a trendy video recording tool. However, they tend to have a very cumbersome control panel. The ability to use basic gestures to control the drone (while in action) without reaching for the remote control would make it easier to use the drone as a selfie camera. And the ability to customise gestures would cover all the necessary actions.

As an alternative means of control, gestures would also be helpful in industrial environments such as construction sites, where there may be several drone operators (a gesture can be used as a stop signal if the primary source of control is lost).

Emergency and rescue services could use this system for mini-drones indoors or in hard-to-reach places where one of the operator's hands is busy. Together with an obstacle avoidance system, this would make the drone fully autonomous, but still manageable when needed without additional equipment.

Another area of application is FPV (first-person view) drones. Here the camera on the headset could be used instead of the one on the drone to recognise gestures. Because hand movement can be impressively precise, this type of control, together with the hand's position in space, can simplify FPV drone control principles for new users.

However, all these applications need a reliable and fast (really fast) recognition system. Existing gesture recognition systems can be broadly divided into two main categories: those that use special physical devices, such as smart gloves or other on-body sensors, and those that rely on visual recognition using various types of cameras. Most of these solutions need additional hardware or rely on classical computer vision techniques. Such solutions are fast, but it is quite hard to add custom gestures to them, let alone motion gestures. The answer we found is MediaPipe Hands, which was used for this project.

Overall project structure

To create a proof of concept for this idea, a Ryze Tello quadcopter was used as the UAV. This drone has an open Python SDK, which greatly simplified development of the program. However, it also has technical limitations that do not allow gesture recognition to run on the drone itself (yet). For this purpose a regular PC or Mac was used. The video stream from the drone and the commands to the drone are transmitted via regular WiFi, so no additional equipment was needed.

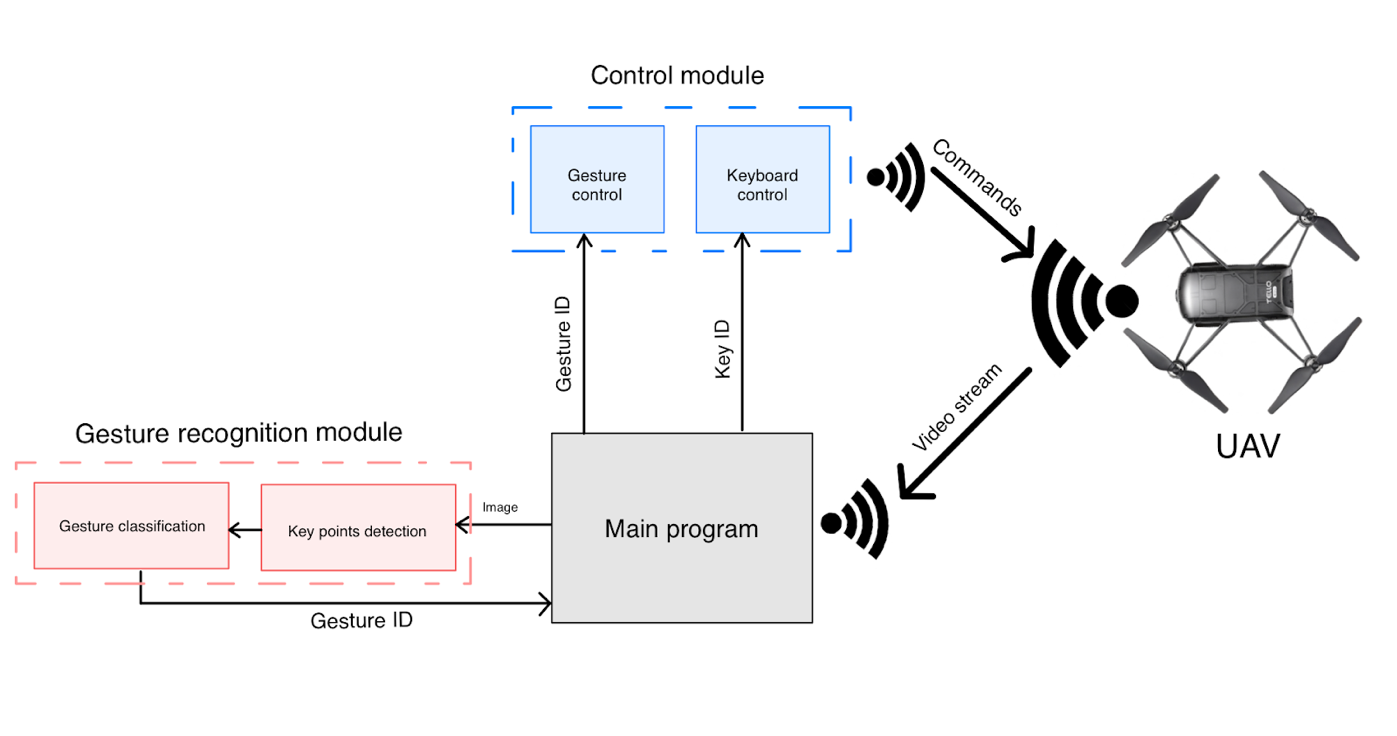

To keep the program structure as plain as possible and make it easy to add gestures, the program architecture is modular, with a control module and a gesture recognition module.

Figure 2: Scheme that shows the overall project structure and how video stream data from the drone is processed

The application is divided into two main parts: gesture recognition and the drone controller. These are independent instances that can be easily modified, for example to add new gestures or change the movement speed of the drone.

The video stream is passed to the main program, which is a simple script containing module initialisation, connections, and the while-true loop typical of hardware control. Each frame of the video stream is passed to the gesture recognition module. Once the ID of the recognised gesture is obtained, it is passed to the control module, where the command is sent to the UAV. Alternatively, the user can control the drone from the keyboard in a more classical manner.

As you can see, the gesture recognition module is divided into keypoint detection and a gesture classifier. It is precisely this combination of the MediaPipe keypoint detector with a custom gesture classification model that distinguishes this gesture recognition system from most others.
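The data flow described above can be sketched as a small loop. This is only an illustration under assumptions: `run_loop`, `recognise`, and `send_command` are hypothetical names standing in for the main script, the gesture recognition module, and the control module, and the frames are dummy values rather than real video.

```python
from typing import Callable, Iterable, Optional

Frame = list  # stand-in for an image array (assumption)


def run_loop(
    frames: Iterable[Frame],
    recognise: Callable[[Frame], Optional[int]],
    send_command: Callable[[int], None],
) -> int:
    """Pass each frame to the recogniser and forward gesture IDs to the controller.

    Returns the number of commands sent.
    """
    sent = 0
    for frame in frames:  # the real program would loop "while True" over the stream
        gesture_id = recognise(frame)
        if gesture_id is None:  # no hand, or no confident gesture, in this frame
            continue
        send_command(gesture_id)
        sent += 1
    return sent


# Usage with stand-in modules (all hypothetical):
log = []
fake_frames = [[0], [1], [2]]
count = run_loop(fake_frames, lambda f: f[0] if f[0] else None, log.append)
```

Because the recogniser and the controller are passed in as plain callables, either one can be swapped (for example, for keyboard control) without touching the loop.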

Gesture recognition with MediaPipe

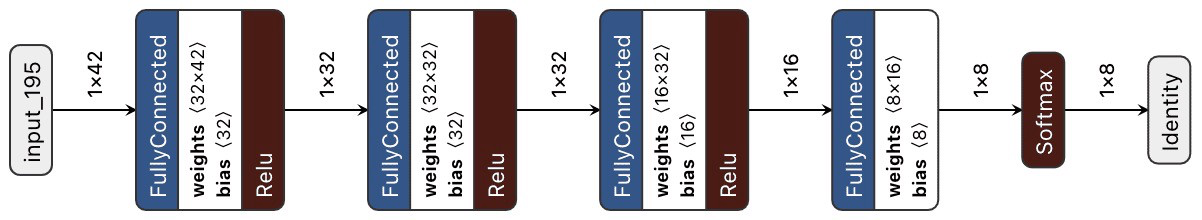

Using MediaPipe Hands is a winning strategy not only in terms of speed, but also in terms of flexibility. MediaPipe already has a simple gesture recognition calculator that can be inserted into the pipeline. However, we needed a more powerful solution with the ability to quickly change the structure and behaviour of the recogniser. To do so and classify gestures, a custom neural network was created with 4 fully-connected layers and 1 softmax layer for classification.

Figure 3: Scheme that shows the structure of the classification neural network

This simple structure takes a vector of 2D coordinates as input and outputs the ID of the classified gesture.
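A minimal sketch of the forward pass of such a network, written in plain Python so it runs without dependencies. The hidden layer widths, the ReLU activations, the random (untrained) weights, and the count of eight gesture classes are assumptions made for illustration; the text only states four fully-connected layers followed by a softmax.

```python
import math
import random

NUM_KEYPOINTS = 21               # hand keypoints per detection
INPUT_SIZE = NUM_KEYPOINTS * 2   # one x and one y value per keypoint
NUM_GESTURES = 8                 # assumption: number of gesture classes
HIDDEN_SIZES = [32, 24, 16]      # assumption: layer widths are not given in the text


def make_layer(n_in, n_out, rng):
    """Create random weights and zero biases for one fully-connected layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer, relu):
    """Apply one fully-connected layer, optionally followed by ReLU."""
    weights, biases = layer
    out = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [max(0.0, o) for o in out] if relu else out


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(x, layers):
    """Return (gesture_id, probabilities) for one keypoint vector."""
    for layer in layers[:-1]:
        x = dense(x, layer, relu=True)
    probs = softmax(dense(x, layers[-1], relu=False))
    return probs.index(max(probs)), probs


rng = random.Random(0)
sizes = [INPUT_SIZE] + HIDDEN_SIZES + [NUM_GESTURES]
layers = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]  # 4 dense layers
gesture_id, probs = classify([0.0] * INPUT_SIZE, layers)
```

With untrained weights the output is meaningless; the point is only the shape of the computation: 42 input values in, one gesture ID out.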

Instead of using cumbersome segmentation models with a more algorithmic recognition process, a simple neural network can easily handle such tasks. Recognising gestures from keypoints, which form a simple vector of 21 points' coordinates, takes much less data and time. More importantly, new gestures can be added easily because retraining the model takes much less time than the algorithmic approach.

To train the classification model, a dataset of normalised keypoint coordinates and gesture IDs was used. The numerical characteristics of the dataset were:

- 3 gestures with 300+ examples (basic gestures)

- 5 gestures with 40-150 examples

Each sample is a vector of x, y coordinates; small tilts and different hand shapes were included during data collection.
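One plausible way to turn 21 detected keypoints into such a normalised vector is sketched below. The exact normalisation used in the project is not described here, so this scheme (shift so the first keypoint, the wrist in MediaPipe Hands, becomes the origin, then divide by the largest absolute value) is an assumption, and `to_feature_vector` is a hypothetical helper name.

```python
def to_feature_vector(landmarks):
    """Flatten 21 (x, y) keypoints into a 42-value vector in the range [-1, 1].

    Assumed normalisation: coordinates relative to the first keypoint,
    scaled by the largest absolute value.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 keypoints")
    base_x, base_y = landmarks[0]
    flat = []
    for x, y in landmarks:
        flat.extend((x - base_x, y - base_y))
    scale = max(abs(v) for v in flat) or 1.0  # avoid dividing by zero
    return [v / scale for v in flat]


# Dummy keypoints, not real detector output.
example = [(0.5 + 0.01 * i, 0.5 - 0.02 * i) for i in range(21)]
vector = to_feature_vector(example)
```

Making the coordinates relative and scaled means the same hand shape yields a similar vector wherever the hand appears in the frame and however large it is.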

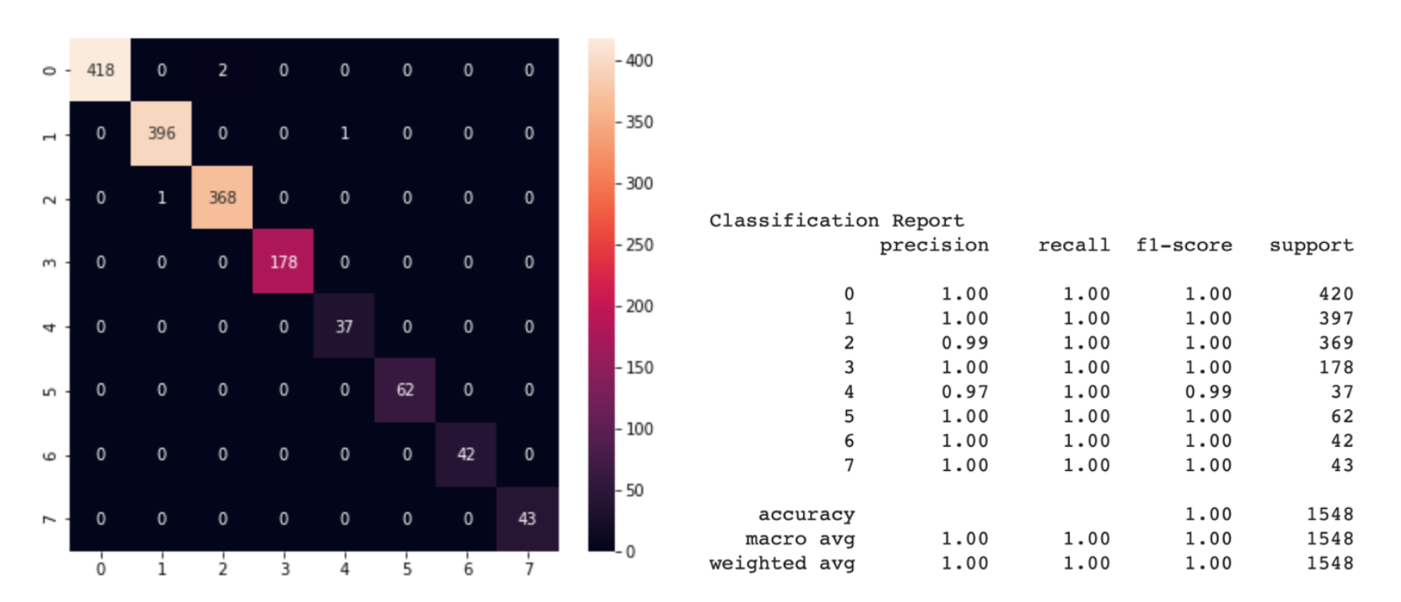

Figure 4: Confusion matrix and classification report for classification

We can see from the classification report that the model is almost error-free on the test dataset (30% of all data), with precision > 97% for every class. Thanks to the simple structure of the model, excellent accuracy can be obtained with a small number of training examples per class. After conducting several experiments, it turned out that fewer than 100 new examples were enough for good recognition of a new gesture. More importantly, we do not need to retrain the model for each motion under different lighting conditions, because MediaPipe takes over all the detection work.

Figure 5: [GIF] Test that demonstrates how fast the classification network can distinguish newly trained gestures using the information from the MediaPipe hand detector
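For readers unfamiliar with the metric, per-class precision can be read off a confusion matrix as the diagonal entry divided by its column sum. The sketch below uses made-up counts for three classes, not the project's results, and `per_class_precision` is a hypothetical helper.

```python
def per_class_precision(confusion):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    n = len(confusion)
    precisions = []
    for j in range(n):
        predicted_j = sum(confusion[i][j] for i in range(n))  # column sum
        precisions.append(confusion[j][j] / predicted_j if predicted_j else 0.0)
    return precisions


# Made-up counts for illustration only.
matrix = [
    [48, 2, 0],
    [1, 29, 0],
    [0, 0, 20],
]
precisions = per_class_precision(matrix)
```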

From gestures to actions

To control a drone, each gesture should represent a command for the drone. The best part about the Tello is that it has a ready-made Python API that lets us do this without explicitly controlling the motor hardware. We just need to map each gesture ID to a command.

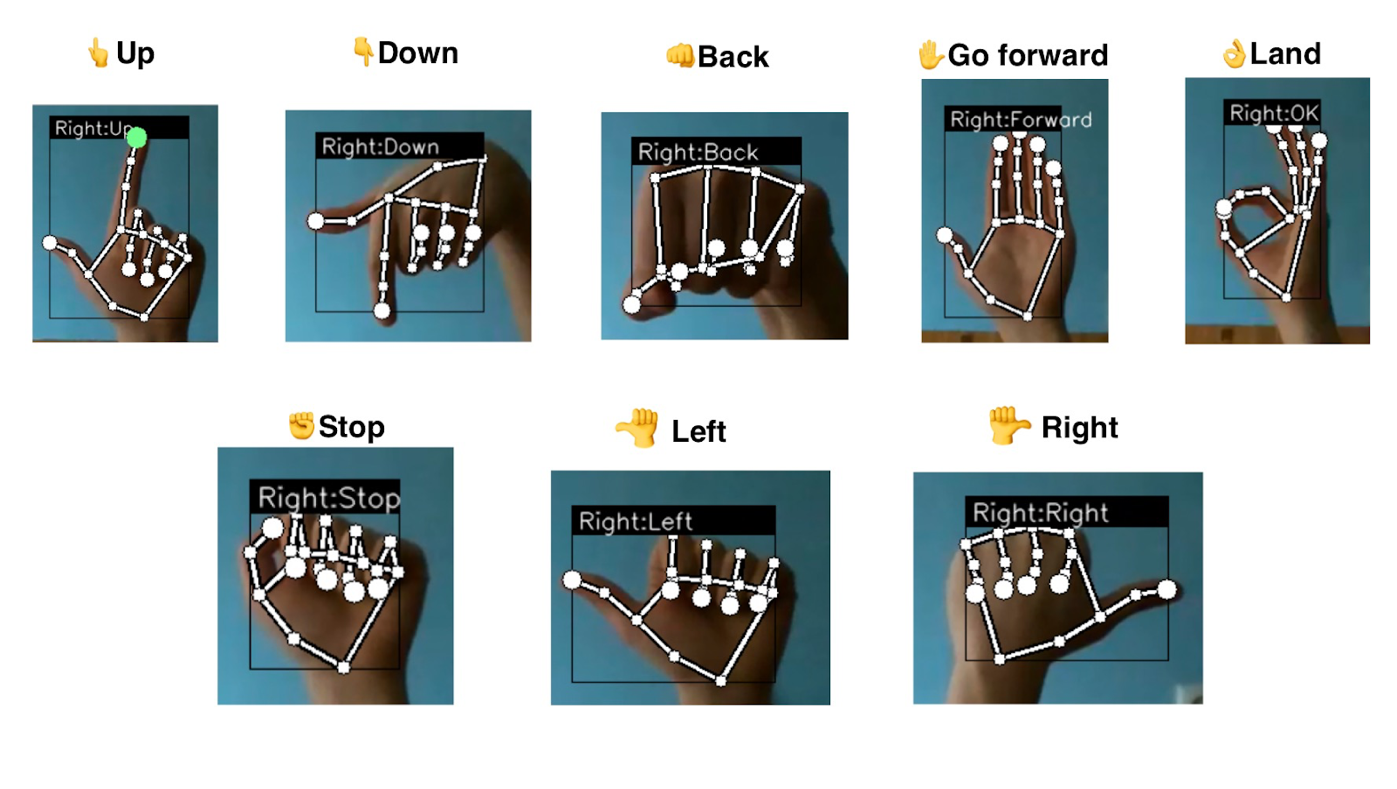

Figure 6: Command-gesture pairs representation
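Such a mapping can be as simple as a dictionary from gesture ID to a velocity tuple. The IDs, the speed value, and the pairings below are hypothetical and chosen only for illustration; the project's actual command-gesture pairs are the ones shown in Figure 6. The four-channel tuple mirrors the left/right, forward/back, up/down, yaw ordering of the Tello SDK's `rc` command.

```python
# Hypothetical gesture IDs and speed; not the project's actual pairs.
SPEED = 30

# Each entry is (left_right, forward_back, up_down, yaw) velocity.
GESTURE_COMMANDS = {
    0: (0, SPEED, 0, 0),    # forward
    1: (0, 0, 0, 0),        # stop
    2: (0, 0, SPEED, 0),    # up
    3: (0, -SPEED, 0, 0),   # back
    4: (0, 0, -SPEED, 0),   # down
}


def command_for(gesture_id):
    """Return the velocity tuple for a gesture, hovering on unknown IDs."""
    return GESTURE_COMMANDS.get(gesture_id, (0, 0, 0, 0))
```

Falling back to zero velocity for an unknown ID is a deliberately cautious choice for this sketch: an unrecognised gesture makes the drone hover rather than move.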

Each gesture sets the speed for one of the axes; that is why the drone's movement is smooth, without jitter. To remove unnecessary movements caused by false detections, which occur even with such a precise model, a special buffer was created that stores the last N gestures. This helps to filter out glitches and inconsistent recognition.
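A buffer of this kind might look like the sketch below. The class name, the buffer size, and the rule for deciding when a gesture counts as stable (it must appear at least `min_count` times among the last N) are assumptions; the text only says that the last N gestures are stored.

```python
from collections import Counter, deque


class GestureBuffer:
    """Keep the last `size` gesture IDs and report one only when it dominates."""

    def __init__(self, size=10, min_count=8):
        self._items = deque(maxlen=size)  # old entries drop off automatically
        self._min_count = min_count

    def add(self, gesture_id):
        self._items.append(gesture_id)

    def stable_gesture(self):
        """Return the dominant gesture ID, or None if nothing dominates yet."""
        if not self._items:
            return None
        gesture_id, count = Counter(self._items).most_common(1)[0]
        return gesture_id if count >= self._min_count else None


buffer = GestureBuffer(size=5, min_count=4)
for g in [2, 2, 7, 2, 2]:  # one spurious detection (7) among five frames
    buffer.add(g)
stable = buffer.stable_gesture()
```

In this example the single misdetected frame is outvoted, so the command sent to the drone does not change.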

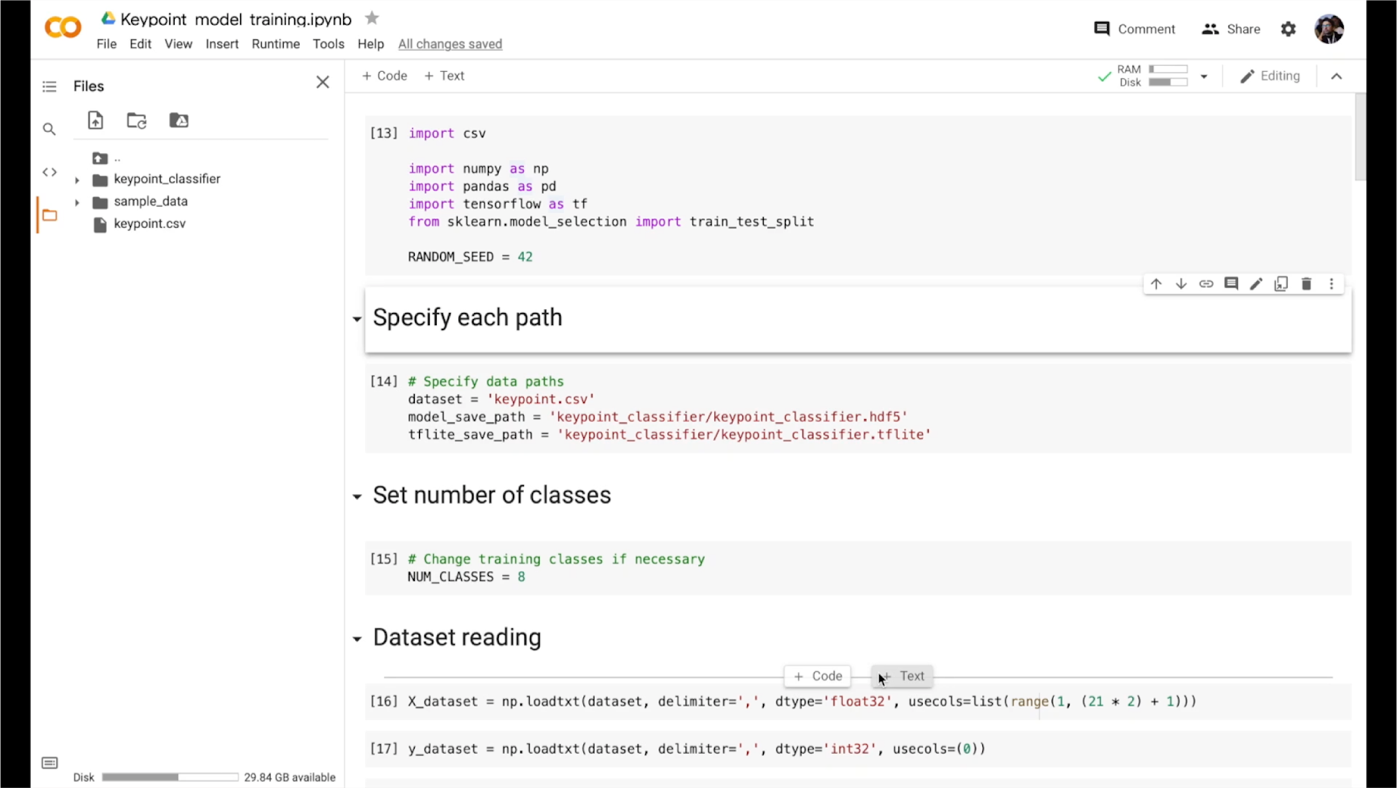

The fundamental goal of this project is to demonstrate the superiority of the keypoint-based gesture recognition approach over classical methods. To demonstrate the full potential of this recognition model and its flexibility, the dataset can be created on the fly, during the drone's flight! You can create your own combinations of gestures or overwrite an existing one without collecting massive datasets or manually tuning a recognition algorithm. By pressing the button and an ID key, the vector of detected points is instantly saved to the overall dataset. This new dataset can be used to retrain the classification network to add new gestures for detection. For now, there is a notebook that can be run on Google Colab or locally. Retraining the classifier network takes about 1-2 minutes on a standard CPU instance. The new binary file of the model can then be used instead of the old one. It is as simple as that. In the future, there is a plan to do the retraining right on the mobile device or even on the drone.

Figure 7: Notebook for model retraining in action
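Saving a sample on a key press can amount to appending one row to a file. The sketch below assumes a CSV layout with the gesture ID in the first column followed by the coordinates; the file name, the layout, and both helper functions are hypothetical.

```python
import csv
import os
import tempfile


def append_sample(path, gesture_id, vector):
    """Append one row: the gesture ID followed by the keypoint coordinates."""
    with open(path, "a", newline="") as f:
        csv.writer(f).writerow([gesture_id, *vector])


def load_samples(path):
    """Read the dataset back as (gesture_id, vector) pairs for retraining."""
    with open(path, newline="") as f:
        return [(int(row[0]), [float(v) for v in row[1:]]) for row in csv.reader(f)]


# Shortened dummy vectors; a real sample would hold 42 values.
path = os.path.join(tempfile.mkdtemp(), "keypoints.csv")
append_sample(path, 3, [0.0, 0.25, -1.0])
append_sample(path, 5, [0.5, 0.5, 0.5])
samples = load_samples(path)
```

Opening the file in append mode keeps existing samples intact, so new gestures accumulate alongside the ones already collected.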

Summary

This project was created to give a push to the area of gesture-controlled drones. The novelty of the approach lies in the ability to add new gestures or change old ones quickly. This is made possible by MediaPipe Hands. It is incredibly fast, reliable, and ready out of the box, which makes gesture recognition very fast and flexible to change. Our team at Neurons Lab is excited about the demonstrated results and is going to try other incredible solutions that MediaPipe provides.

We will also keep track of MediaPipe updates, especially those that add more flexibility in creating custom calculators for our own models and lower the barriers to entry when creating them. Since our classifier model currently sits outside the graph, such improvements would make it possible to quickly implement a custom calculator with our model.

Another highly anticipated feature is Flutter support (especially for iOS). In the original plans, inference and visualisation were supposed to run on a smartphone with NPU/GPU utilisation, but at the moment the quality of support does not meet our needs. Flutter is a very powerful tool for rapid prototyping and concept checking. It allows us to quickly try out and test an idea cross-platform without involving a dedicated mobile developer, so such support is in high demand.

Nevertheless, development of this demo project continues with the available functionality, and there are already several plans for the future. One is to use MediaPipe Holistic for face recognition and subsequent authorisation. The drone will be able to authorise the operator and give permission for gesture control. This also opens the way to personalisation. Since the classifier network is simple, each user will be able to customise gestures for themselves (simply by using another version of the classifier model). Depending on the authorised user, one or another saved model will be applied. We also plan to add use of the Z-axis, for example tilting the palm of your hand to control the speed of movement or the height more precisely. We encourage developers to innovate responsibly in this area, and to consider responsible AI practices such as testing for unfair biases and designing with safety and privacy in mind.

We strongly believe that this project will motivate even small teams to do projects in the field of ML computer vision for UAVs, and that MediaPipe will help them cope with the limitations and difficulties on their way (such as scalability, cross-platform support, and GPU inference).

If you want to contribute, or have ideas or comments about this project, please reach out to [email protected], or visit the GitHub page of the project.

This blog post is curated by Igor Kibalchich, ML Research Product Manager at Google AI.

![Figure 5: [GIF] Test that demonstrates how fast classification network can distinguish newly trained gestures using the information from MediaPipe hand detector](https://1.bp.blogspot.com/-P79noq5JrSM/YTqBpNUHNJI/AAAAAAAAKwI/Xp1WqAMKAJckPIX1CPUDfGOVn8evY_djQCLcBGAsYHQ/s0/figure%2B5.gif)